Server-less Architectures

Deploying Modern Web Applications with Serverless Architectures (Revised)

Today, we’re diving into serverless architectures, focusing on Lambda functions and edge functions, to make our blogging app shine online for millions of users. Why serverless? It lets you focus on coding, not server management, and scales automatically. Imagine your blog goes viral—thousands of users flood in. A traditional server might crash, but serverless handles it like a champ. Sounds perfect, right? Well, not so fast. Some students think serverless is a one-size-fits-all fix. Spoiler: it’s powerful but has quirks, like tricky debugging or unexpected costs. Let’s explore how to use it wisely, with clear examples and pitfalls to avoid!

Section 1: Serverless Basics

What is Serverless?

Serverless doesn’t mean “no servers”—servers exist, but providers like AWS, Vercel, or Cloudflare manage them. You deploy code, and they handle scaling and maintenance. For our blogging app, this means running an API to fetch posts without renting a server.

How It Works

Your app is split into small functions that run only when triggered (e.g., a user requests /posts?tag=tech). When no one is using your app, no function instances are running, so you pay nothing for idle time. These functions are stateless: anything held in memory can disappear between invocations, so you store data in external services like DynamoDB or Redis.
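To make the "stateless" point concrete, here is a minimal sketch in TypeScript. It simulates two function instances that each keep a local counter and also write to a shared store. The names createInstance and sharedStore are hypothetical, and the Map only stands in for an external database such as DynamoDB or Redis.

```typescript
// Hypothetical simulation: each serverless instance has its own memory.
// The Map stands in for an external store (e.g., DynamoDB or Redis).
function createInstance(sharedStore: Map<string, number>) {
  // Local state: private to this instance and lost when it is recycled.
  let localViews = 0;

  return (postId: string) => {
    localViews += 1;

    // Shared state: visible to every instance because it lives outside them.
    const total = (sharedStore.get(postId) ?? 0) + 1;
    sharedStore.set(postId, total);

    return { localViews, total };
  };
}
```

If two instances each handle one request for the same post, both report localViews of 1, while the shared total reaches 2. That gap is why anything that must survive between requests belongs in the external store.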

⚠️ If you deploy your app to Vercel, the application automatically runs as serverless.

Advantages

- Pay-as-You-Go: Only pay for what you use.

- Auto-Scaling: Scales with traffic automatically, up to the provider’s concurrency limits.

- No Server Hassle: Forget patching or updates.

Disadvantages

- Cold Starts: Functions may lag slightly when starting.

- Debugging: Tracing issues across functions is tough.

- Lock-In: Switching providers can be a headache.

Misconception Alert

Many think serverless is “always cheap” or “plug-and-play.” Nope! Poorly designed functions can rack up costs, and setup takes planning. Let’s see how to do it right.

Section 2: AWS Lambda Functions for Backend Power

What Are Lambda Functions?

AWS Lambda lets you run small, single-purpose functions triggered by events, like HTTP requests. For our blogging app, a Lambda function could fetch posts from DynamoDB when users hit /api/posts.

How They Work

- Write a function (e.g., in Node.js).

- Deploy to AWS Lambda.

- Set a trigger (e.g., API Gateway for HTTP).

- AWS scales it automatically.

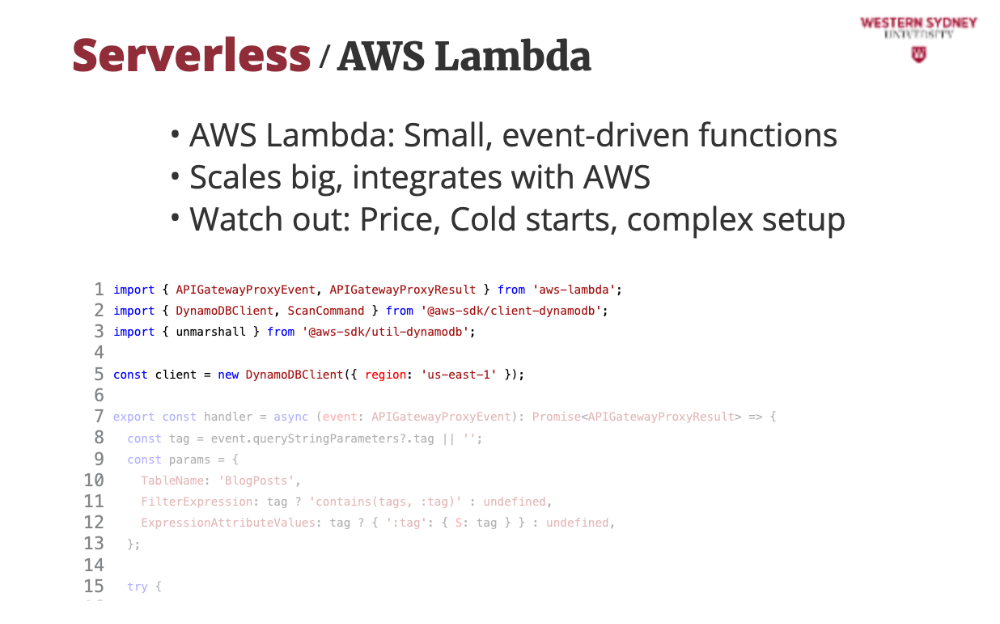

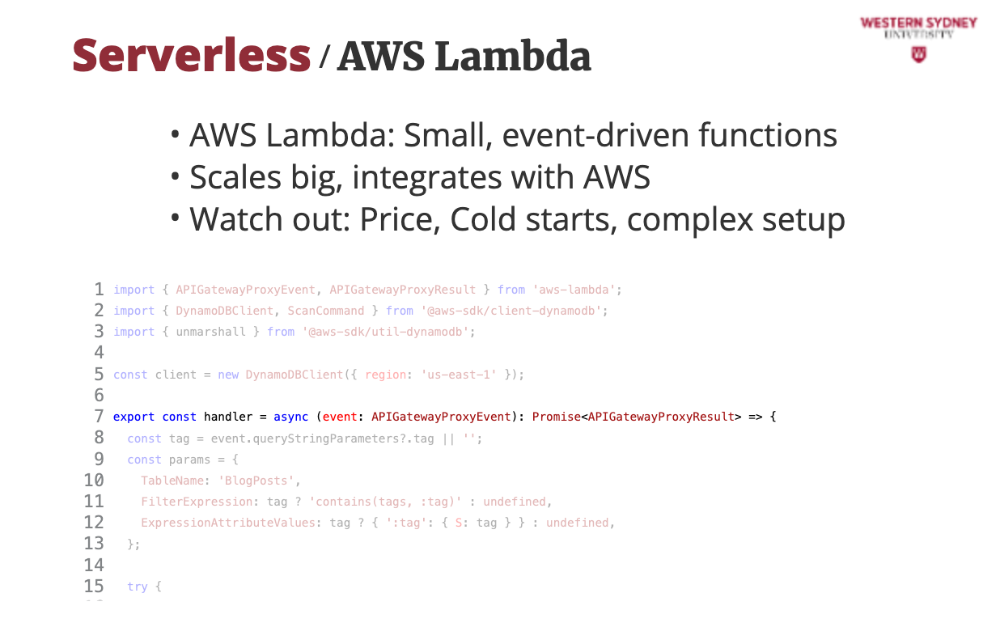

Example: Fetching Posts

Here’s a Lambda function for /api/posts?tag=tech:

const AWS = require('aws-sdk');

const dynamoDB = new AWS.DynamoDB.DocumentClient();

exports.handler = async (event) => {

const tag = event.queryStringParameters?.tag || '';

const params = {

TableName: 'BlogPosts',

FilterExpression: tag ? 'contains(tags, :tag)' : undefined,

ExpressionAttributeValues: tag ? { ':tag': tag } : undefined,

};

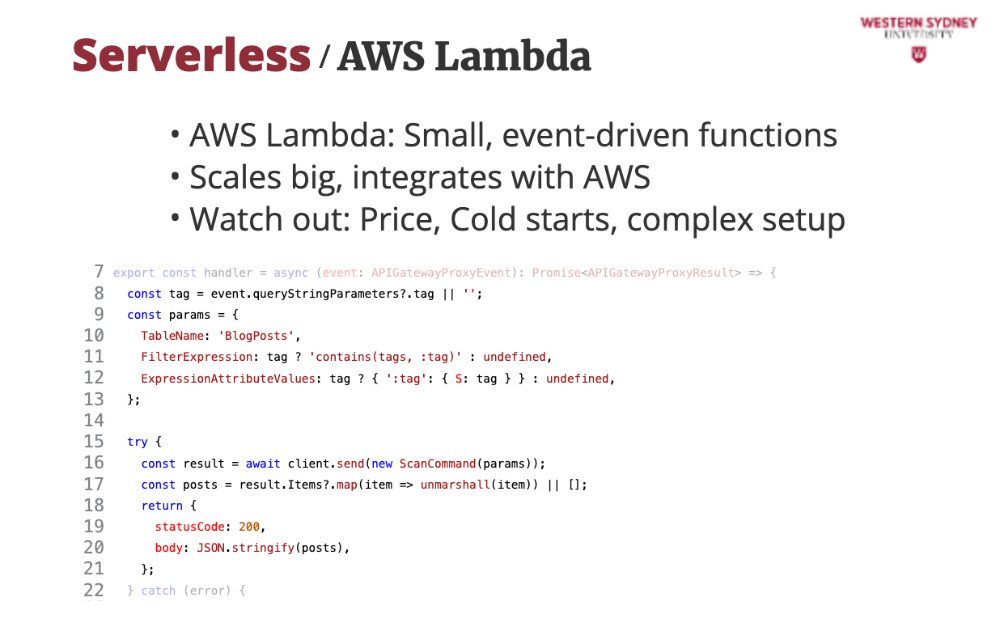

try {

const data = await dynamoDB.scan(params).promise();

return {

statusCode: 200,

body: JSON.stringify(data.Items),

};

} catch (error) {

return {

statusCode: 500,

body: JSON.stringify({ error: 'Failed to fetch posts' }),

};

}

};

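This scans the BlogPosts table and, when a tag is provided, keeps only posts whose tags contain it. Note that a DynamoDB scan reads every item before the filter is applied, so it gets slower and more expensive as the table grows.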
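One way to keep the scan parameters tidy is to build them in a small helper that only adds the filter keys when a tag is present. The sketch below is an illustration under assumptions: the helper name buildScanParams and the ScanParams type are hypothetical, and the table and attribute names are simply the ones used in the handler above.

```typescript
// Hypothetical helper: the shape mirrors the scan parameters used in the handler.
interface ScanParams {
  TableName: string;
  FilterExpression?: string;
  ExpressionAttributeValues?: Record<string, string>;
}

function buildScanParams(tableName: string, tag?: string): ScanParams {
  const params: ScanParams = { TableName: tableName };

  // Ignore missing or whitespace-only tags so we never send an empty filter.
  const trimmed = tag?.trim();
  if (trimmed) {
    params.FilterExpression = 'contains(tags, :tag)';
    params.ExpressionAttributeValues = { ':tag': trimmed };
  }

  return params;
}
```

Keeping this logic in a pure function also makes it testable without touching AWS: calling buildScanParams('BlogPosts') yields an object with only TableName, while passing 'tech' adds the two filter keys.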

Deploying Lambda

- Package the code (e.g., ZIP file).

- Upload via AWS Console or CLI:

    aws lambda create-function --function-name GetPosts ....

- Link to API Gateway for HTTP access.

- Set IAM roles for DynamoDB.

Advantages

- Scales effortlessly.

- Deep AWS integration.

- Precise billing.

Disadvantages

- Cold starts (100-500ms).

- Complex setup (IAM, Gateway).

- Costly for constant traffic.

Pitfall

Don’t skimp on memory allocation—128MB is cheap but slow. Test 512MB or 1024MB for speed, but watch costs.
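To reason about the memory trade-off, a rough cost model helps. The sketch below assumes Lambda-style billing (a per-request charge plus a charge per GB-second of execution). The function name estimateMonthlyCost and the rates in ASSUMED_PRICING are illustrative assumptions, not quoted prices, so check your provider's current pricing before relying on the numbers.

```typescript
// Assumed example rates, for illustration only.
interface LambdaPricing {
  perGbSecond: number;        // charge for one GB of memory held for one second
  perMillionRequests: number; // charge per one million invocations
}

const ASSUMED_PRICING: LambdaPricing = {
  perGbSecond: 0.0000166667,
  perMillionRequests: 0.2,
};

function estimateMonthlyCost(
  invocations: number,
  avgDurationMs: number,
  memoryMb: number,
  pricing: LambdaPricing = ASSUMED_PRICING,
): number {
  // Compute is billed as memory (GB) multiplied by execution time (seconds).
  const gbSeconds = invocations * (avgDurationMs / 1000) * (memoryMb / 1024);
  const computeCost = gbSeconds * pricing.perGbSecond;
  const requestCost = (invocations / 1_000_000) * pricing.perMillionRequests;
  return computeCost + requestCost;
}
```

Under this model, a function that takes 800ms at 128MB and 200ms at 512MB (hypothetical timings) uses the same GB-seconds either way, so the faster configuration costs no more. If the extra memory does not shorten the run, the bill grows in proportion to the memory.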

Section 3: Edge Functions for Speed and Clarity

What Are Edge Functions?

Edge functions run on Content Delivery Networks (CDNs) at locations close to users, minimising latency. They’re great for lightweight tasks like rewriting URLs or personalising content in our blogging app. Unlike Lambda, they run in V8 isolates, not full Node.js, so they’re leaner but less powerful.

How They Work

- Write a JavaScript function.

- Deploy to a provider like Vercel or Cloudflare.

- The provider runs it at edge nodes (e.g., Tokyo, London) when triggered.

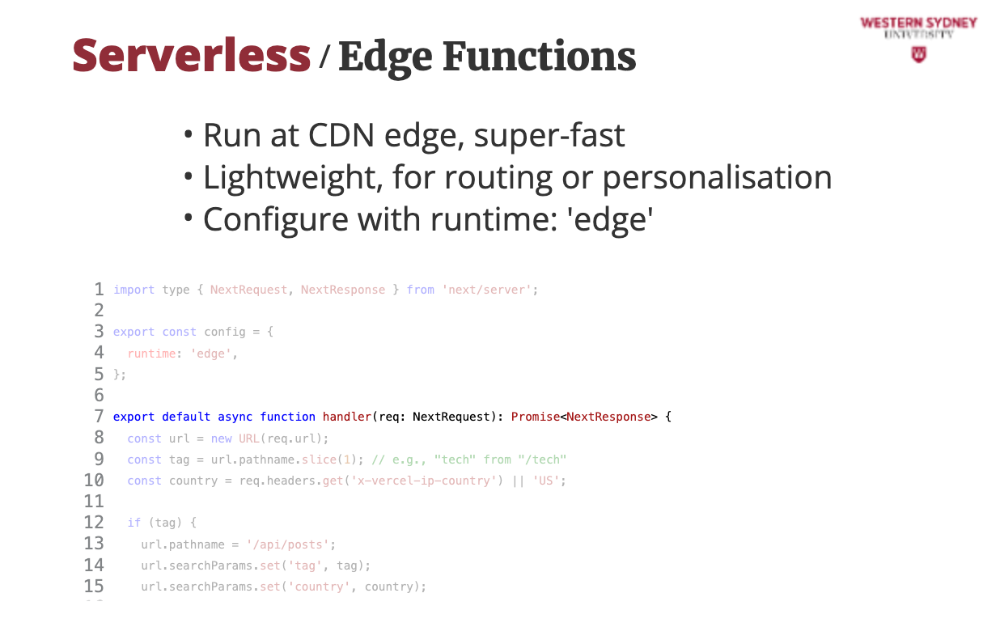

Configuring an Edge Function

Let’s configure an edge function to rewrite /tech to /api/posts?tag=tech. Here’s how to set it up with Vercel, step-by-step:

Step 1: Write the Function

Create a file in your Next.js project at /api/edge.ts:

    import type { NextRequest } from 'next/server';

    export const config = {
      runtime: 'edge', // <- This tells Vercel to run it as an edge function
    };

    export default async function handler(req: NextRequest) {
      const url = new URL(req.url);
      const tag = url.pathname.slice(1); // e.g., "tech" from "/tech"

      if (tag) {
        url.pathname = '/api/posts';
        url.searchParams.set('tag', tag);
        return fetch(url);
      }

      return new Response('Invalid tag', { status: 400 });
    }

The runtime: 'edge' line is critical: it instructs Vercel to deploy this as an edge function, not a Lambda or server function. Without it, Vercel might default to Node.js, losing edge benefits.

Step 2: Deploy

Run vercel deploy from your project root. Vercel detects the edge runtime and distributes the function to its global CDN.

Step 3: Test

Visit https://your-app.vercel.app/tech. The function rewrites the URL and fetches tech-tagged posts.

Step 4: Verify Edge Execution

Check response headers (e.g., x-vercel-edge-region) to confirm it ran at an edge node like iad1 (Virginia) or hnd1 (Tokyo).

Why Configure as Edge?

The runtime: 'edge' setting ensures the function runs on Vercel’s CDN, reducing latency to <50ms for users worldwide. Without this, it might run as a serverless function in a single region (e.g., us-east-1), adding 100-200ms for distant users.
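The rewrite step itself is plain URL manipulation, so it can be pulled out into a standalone function and tested without deploying. This is a minimal sketch: the function name rewriteTagUrl and the rule that a tag is a single segment of letters, digits, and hyphens are assumptions made for illustration, not part of the handler above.

```typescript
// Hypothetical helper: returns the rewritten URL, or null if the path is not a valid tag.
function rewriteTagUrl(requestUrl: string): string | null {
  const url = new URL(requestUrl);
  const tag = url.pathname.slice(1); // "/tech" -> "tech"

  // Assumed rule: a tag is one path segment of letters, digits, or hyphens.
  if (!/^[a-z0-9-]+$/i.test(tag)) {
    return null;
  }

  url.pathname = '/api/posts';
  url.searchParams.set('tag', tag);
  return url.toString();
}
```

An edge handler could call this helper and return a 400 response when it yields null. Validating the tag up front also keeps nested paths such as /a/b from being forwarded as a tag.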

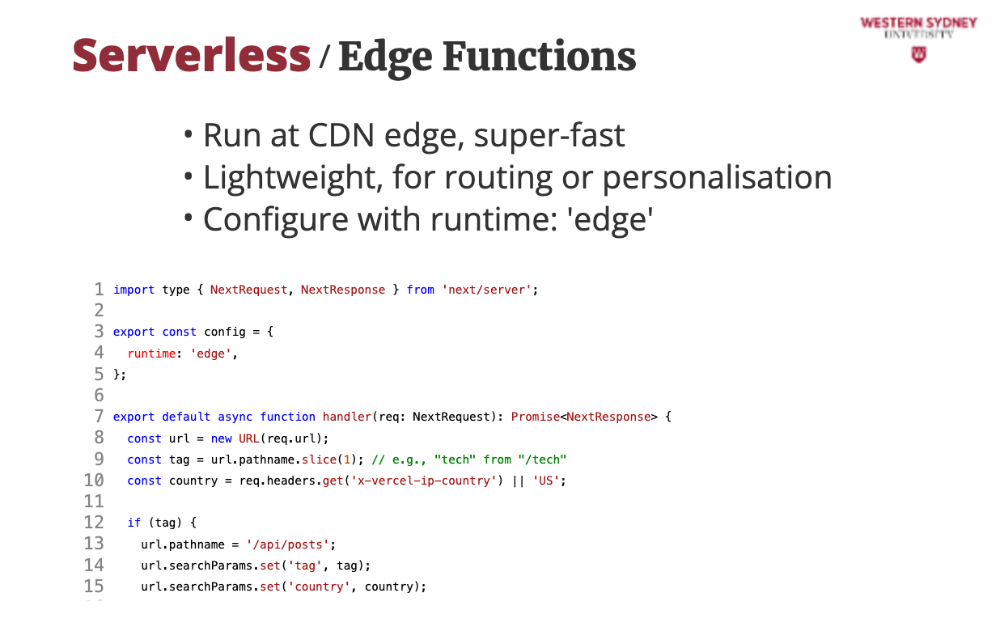

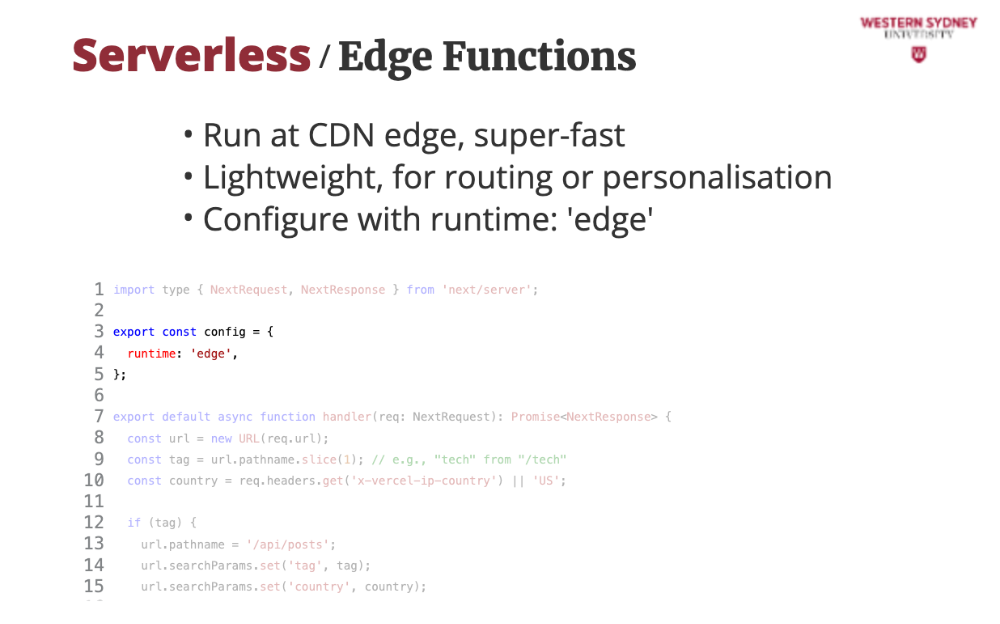

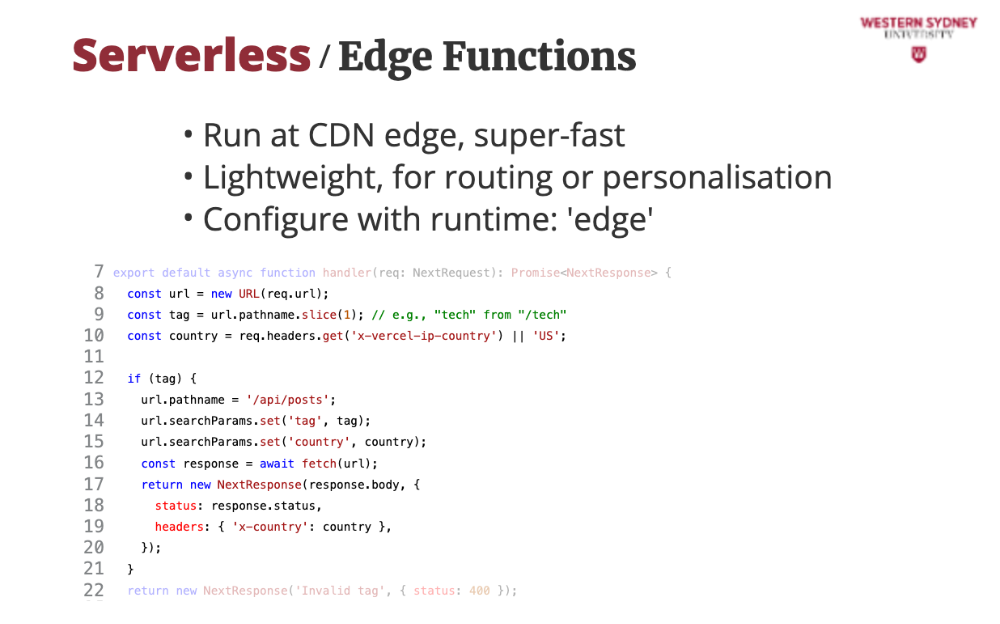

Advanced Example: Personalization

Let’s add user location-based content:

    import type { NextRequest } from 'next/server';

    export const config = {
      runtime: 'edge',
    };

    export default async function handler(req: NextRequest) {
      const url = new URL(req.url);
      const tag = url.pathname.slice(1);
      const country = req.headers.get('x-vercel-ip-country') || 'US';

      if (tag) {
        url.pathname = '/api/posts';
        url.searchParams.set('tag', tag);
        url.searchParams.set('country', country);

        const response = await fetch(url);
        return new Response(response.body, {
          headers: { 'x-country': country },
        });
      }

      return new Response('Invalid tag', { status: 400 });
    }

This uses the user’s country to customise posts, running at the edge for speed.
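When an edge function wraps an upstream response in a new Response, it is worth carrying over the upstream status code and headers (such as Content-Type) rather than sending only the new header. The sketch below shows one way to do that with the standard Fetch API classes; the helper name withCountryHeader is hypothetical.

```typescript
// Hypothetical helper: copies the upstream response and adds an x-country header.
function withCountryHeader(upstream: Response, country: string): Response {
  // Start from the upstream headers so Content-Type and caching headers survive.
  const headers = new Headers(upstream.headers);
  headers.set('x-country', country);

  return new Response(upstream.body, {
    status: upstream.status,
    statusText: upstream.statusText,
    headers,
  });
}
```

With this helper, a 404 or 500 from the posts API reaches the user as a 404 or 500 instead of being reported as a 200, which makes problems much easier to spot when debugging.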

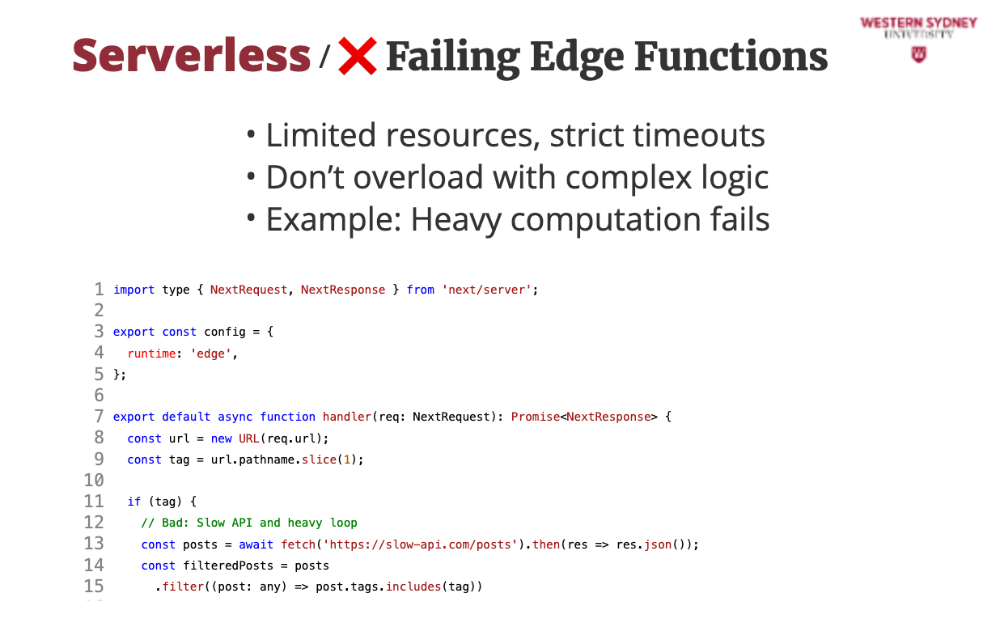

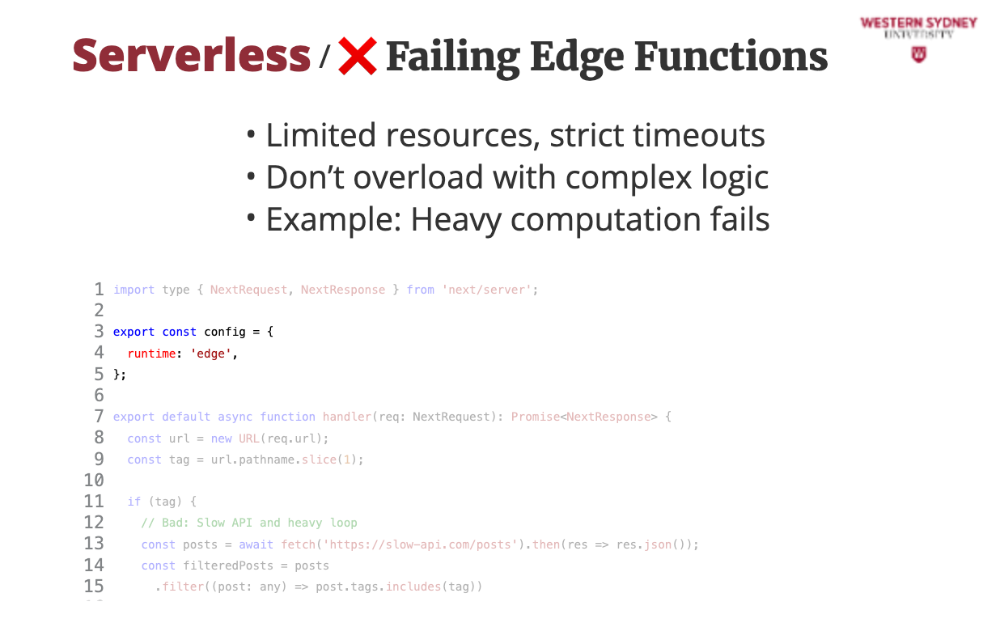

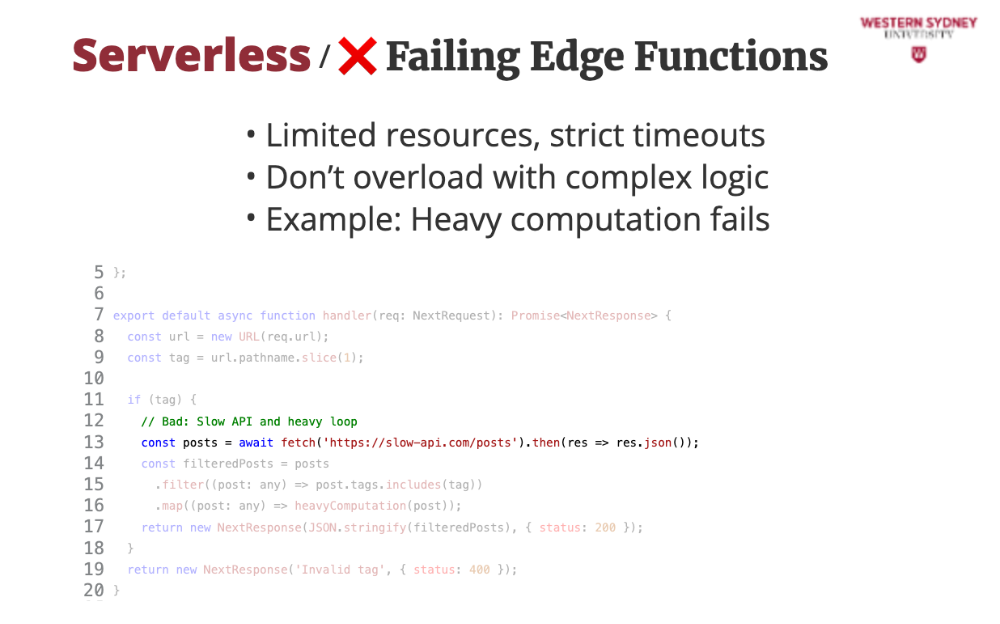

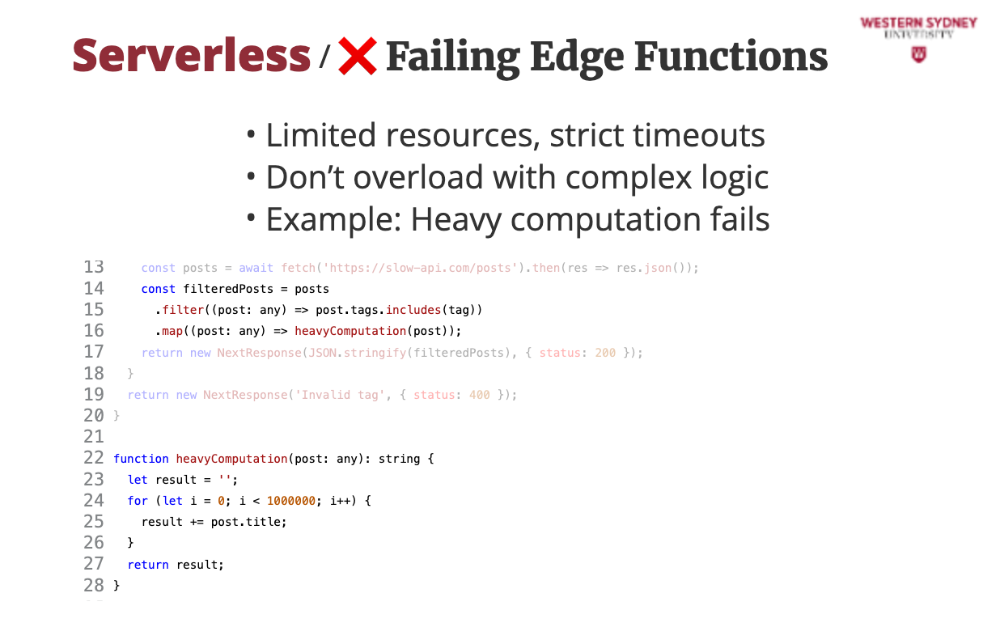

When Edge Functions Fail

Edge functions are lightweight, so they can fail if misused. Let’s see an example where our blogging app’s edge function tries to do too much:

    import type { NextRequest } from 'next/server';

    export const config = {
      runtime: 'edge',
    };

    export default async function handler(req: NextRequest) {
      const url = new URL(req.url);
      const tag = url.pathname.slice(1);

      if (tag) {
        // Bad idea: Heavy computation
        const posts = await fetch('https://slow-api.com/posts').then(res => res.json());
        const filteredPosts = posts
          .filter(post => post.tags.includes(tag))
          .map(post => heavyComputation(post)); // Simulate CPU-intensive task
        return new Response(JSON.stringify(filteredPosts), { status: 200 });
      }

      return new Response('Invalid tag', { status: 400 });
    }

    function heavyComputation(post: any) {
      // Simulate complex processing
      let result = '';
      for (let i = 0; i < 1000000; i++) {
        result += post.title;
      }
      return result;
    }

Why It Fails:

- Timeout: Edge functions have strict limits (e.g., 1-5 seconds on Vercel). The slow API call and heavy loop exceed this, causing a timeout.

- Resource Constraints: Edge runtimes lack the CPU/memory for intensive tasks.

- Result: Users see a 504 Gateway Timeout or an error like “Execution timed out.”

Fix: Move heavy logic to a Lambda function and use the edge function only for routing or light transformations. For example, rewrite to a Lambda endpoint instead of processing posts directly.
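The fix can be sketched as an edge-side helper that only builds the backend URL and forwards the request, giving up with a 504 if the backend is too slow. This is an illustration under assumptions: the name forwardToBackend, the backendBase parameter, and the default 3000ms budget are all hypothetical, and the fetch implementation is passed in so the logic can be exercised without a network.

```typescript
// Minimal fetch-like signature so a fake can be injected in tests.
type FetchLike = (input: string, init?: { signal?: AbortSignal }) => Promise<Response>;

// Hypothetical helper: light routing only, with a time budget for the backend call.
async function forwardToBackend(
  requestUrl: string,
  backendBase: string, // assumed base URL of the Lambda-backed API
  fetchImpl: FetchLike,
  timeoutMs = 3000,    // assumed budget, not a provider limit
): Promise<Response> {
  const tag = new URL(requestUrl).pathname.slice(1);
  if (!tag) {
    return new Response('Invalid tag', { status: 400 });
  }

  const target = new URL('/api/posts', backendBase);
  target.searchParams.set('tag', tag);

  // Abort the backend call if it exceeds the time budget.
  const controller = new AbortController();
  const timer = setTimeout(() => controller.abort(), timeoutMs);
  try {
    return await fetchImpl(target.toString(), { signal: controller.signal });
  } catch {
    return new Response('Upstream timed out or failed', { status: 504 });
  } finally {
    clearTimeout(timer);
  }
}
```

In an edge handler you would pass the global fetch as fetchImpl. All filtering and heavy processing then happens in the Lambda function behind /api/posts, and the edge function stays within its limits.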

Advantages

- Lightning-fast (<50ms).

- Global distribution.

- Simple for front-end tasks.

Disadvantages

- Limited to lightweight logic.

- Provider-specific APIs.

- Hard to debug globally.

Pitfall

Don’t use edge functions for database queries or CPU-heavy tasks. They’ll time out or crash. Stick to routing, headers, or caching.

Section 4: Deploying a Next.js App as Lambda

Why Next.js with Lambda?

Our blogging app is built with Next.js, which supports serverless deployment out of the box. Deploying it as Lambda functions lets each route (e.g., /posts, /admin) run independently, scaling efficiently. Vercel and AWS both support this, but let’s focus on AWS for full control.

How It Works

Next.js generates serverless functions for each page or API route. You package these as Lambda functions, with API Gateway or an Application Load Balancer routing requests. Static assets (e.g., images, CSS) go to S3, served via CloudFront.

Step-by-Step Deployment

Let’s deploy our blogging app to AWS Lambda:

Step 1: Configure Next.js for Serverless

In next.config.js, set the build target to serverless:

    module.exports = {
      target: 'serverless',
    };

This tells Next.js to generate functions compatible with Lambda. Note that the target option applies to Next.js 12 and earlier; it was removed in Next.js 13, so check which version your project uses.

Step 2: Build the App

Run next build. This creates a .next folder with serverless functions (e.g., pages/posts.js becomes a Lambda-compatible bundle).

Step 3: Package for Lambda

Use a tool like serverless or @sls-next/serverless-component. Here’s an example with the Serverless Framework.

Create a serverless.yml:

    service: blog-app

    provider:
      name: aws
      runtime: nodejs18.x

    functions:
      default:
        handler: .next/server/pages/_app.handler
        events:
          - http: ANY /
          - http: ANY /{proxy+}

    plugins:
      - serverless-nextjs-plugin

This defines a Lambda function for all routes, routing via API Gateway.

Step 4: Deploy

Install dependencies: npm install serverless serverless-nextjs-plugin.

Run: serverless deploy.

The tool uploads functions to Lambda, static files to S3, and sets up CloudFront.

Step 5: Test

Visit the CloudFront URL (e.g., https://d123456789.cloudfront.net/posts?tag=tech). You’ll see your app live, with each page running as a Lambda function.

Example: Blogging App Routes

- Public Route: /posts?tag=tech triggers a Lambda function rendering the posts page, querying DynamoDB.

- Admin Route: /admin triggers another Lambda for the admin dashboard, secured with IAM.

Each function is independent, scaling based on demand.

Advantages

- Scales per route.

- Integrates with AWS ecosystem.

- Cost-effective for dynamic pages.

Disadvantages

- Cold starts per function.

- Complex setup vs. Vercel.

- Static assets need separate handling.

Pitfall

Don’t forget to optimise cold starts. Use tools like serverless-plugin-warmup to keep functions “warm” by pinging them periodically. Also, ensure S3 and CloudFront are configured correctly, or static files (e.g., images) won’t load.
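Warm-up pings only help if the function returns quickly when it receives one, instead of running its normal logic. The sketch below wraps a handler so that warm-up events short-circuit. It assumes the ping event carries a source field set to 'serverless-plugin-warmup'; treat that marker, and the names isWarmupEvent and skipWarmup, as assumptions to verify against the plugin version you use.

```typescript
type Handler<E, R> = (event: E) => Promise<R>;

// Assumed marker that the warm-up tool places on its ping events.
const WARMUP_SOURCE = 'serverless-plugin-warmup';

// Hypothetical predicate: true when the event looks like a warm-up ping.
function isWarmupEvent(event: unknown): boolean {
  return (
    typeof event === 'object' &&
    event !== null &&
    (event as { source?: unknown }).source === WARMUP_SOURCE
  );
}

// Hypothetical wrapper: returns warmResponse for pings, otherwise runs the real handler.
function skipWarmup<E, R>(handler: Handler<E, R>, warmResponse: R): Handler<E, R> {
  return async (event: E) => (isWarmupEvent(event) ? warmResponse : handler(event));
}
```

Wrapping the posts handler this way keeps warm-up pings from triggering a DynamoDB read every few minutes, which would otherwise add cost for no benefit.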

Alternative: Vercel Serverless

Vercel can deploy Next.js as Lambda-like functions automatically:

- Push to Git: git push.

- Vercel builds and deploys, splitting pages into functions.

- No manual AWS setup, but less control.

For simplicity, students might start here, but AWS teaches you the nuts and bolts.

Section 5: Choosing the Right Tool

Lambda Functions

- Use for: Backend APIs, admin tasks, heavy logic.

- Example: /api/posts querying DynamoDB.

- Provider: AWS, Azure.

Edge Functions

- Use for: URL rewrites, personalization, caching.

- Example: Rewriting /tech to /api/posts?tag=tech.

- Provider: Vercel, Cloudflare.

Next.js as Lambda

- Use for: Full-stack apps with dynamic routes.

- Example: Entire blogging app, with pages as functions.

- Provider: AWS, Vercel.

Hybrid Approach

Use edge functions for user-facing tweaks, Lambda for backend, and Next.js as Lambda for the app. For our blog: edge functions rewrite URLs, Lambda handles APIs, and Next.js serves pages.

Key Takeaways

We’ve unpacked serverless deployment for our blogging app, covering Lambda functions, edge functions, and Next.js as Lambda. Here’s the gist:

- Lambda: Perfect for backend power but needs careful setup and cold start management.

- Edge Functions: Super-fast for front-end tasks, but keep them light to avoid timeouts (like our failed heavy-computation example).

- Next.js as Lambda: Scales entire apps, with AWS for control or Vercel for ease.

Best Practices

- Configure edge functions explicitly (e.g., runtime: 'edge') for speed.

- Deploy Next.js with tools like Serverless Framework for AWS Lambda.

- Monitor costs—serverless isn’t free if misused.

Pitfalls to Avoid

- Don’t overload edge functions; they’ll crash under heavy logic.

- Optimize Lambda memory and warmup to reduce latency.

- Test Next.js deployments thoroughly—misconfigured S3 breaks assets.

You’re now ready to deploy scalable apps! Try pushing our blogging app to Vercel or AWS, and next time, we’ll explore monitoring to keep it running smoothly. Keep coding, and let’s make the internet a better place.

Slides

Imagine your blogging app goes viral—thousands of users flood in! Deployment makes it happen, and serverless is the key: no server hassle, auto-scaling magic. But is it flawless? Join us to explore Lambda and edge functions, uncover pitfalls, and deploy our app like pros! Ready to launch?

Imagine your blogging app goes viral—thousands flood in! A viral post could crash a traditional server, but serverless deployments scale really well and make your application shine!

Serverless lets you deploy without managing servers. Providers like AWS or Vercel handle scaling, so your app stays fast and reliable, even under heavy traffic. More users? No problem, they spin up another server for you!

Today, we'll show you how to deploy our app using AWS Lambda for backend power, edge functions for speed, and Next.js as Lambda for the full stack. Get ready to launch!

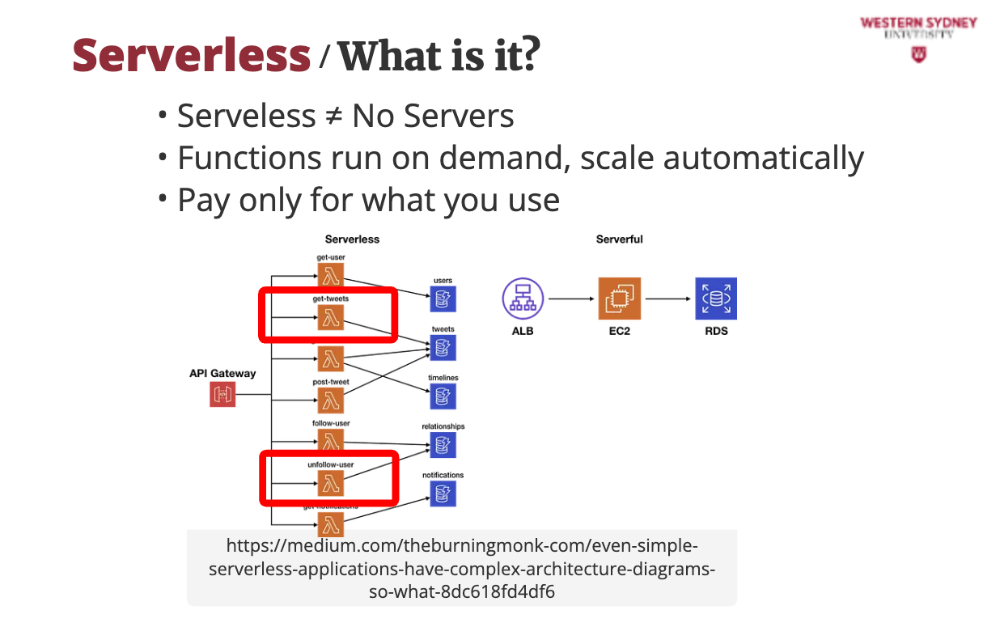

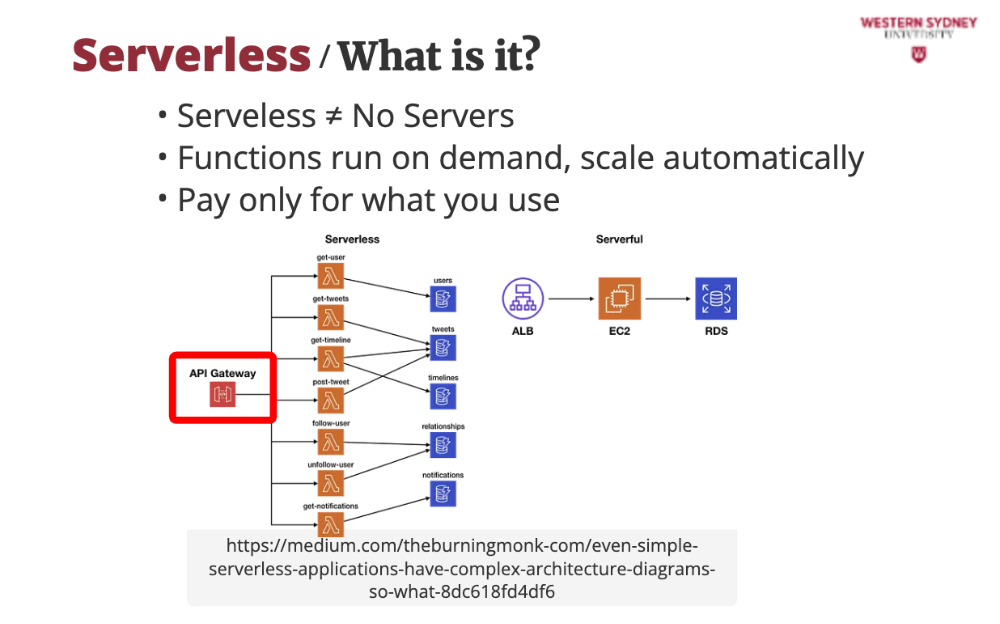

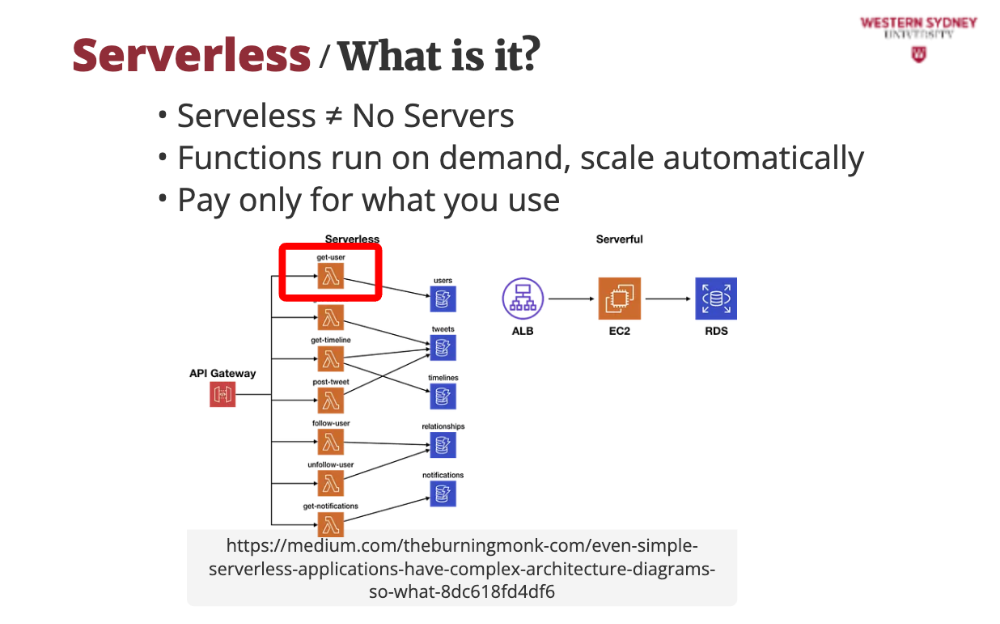

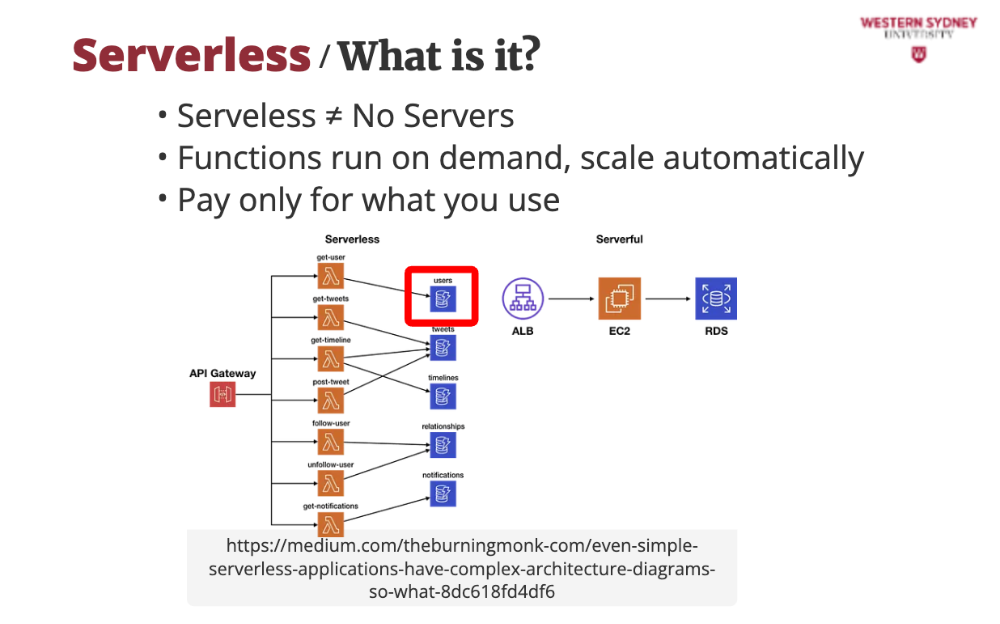

Serverless doesn’t eliminate servers; AWS, Vercel, or Cloudflare run them for you. You focus on code; they handle scaling and maintenance.

Your app splits into small functions, like fetching posts, that run only when needed, scaling from 10 to 10,000 users seamlessly. More users? No problem, a new instance is launched to execute your function!

No traffic? No cost! You’re billed only for function execution time, which is perfect for startups like our blog.

This diagram shows a user hitting our blog’s /api/posts. The request goes through API Gateway ...

... triggers a Lambda function by loading your application and launching only the related function code ....

... fetches posts from the database and returns them to the user. Serverless ties it together, scaling each piece automatically. Serverless is a game-changer! Providers manage servers, so you write functions that run on demand, like fetching posts for our blog. You pay only for usage, and scaling’s automatic. But it’s not magic; let’s see how it powers our app!

Amazon Web Services introduced Lambda functions as the main driver behind its serverless architecture. You can find similar solutions in Microsoft Azure or other cloud providers. For simplicity, we will focus on AWS. AWS Lambda runs single-purpose code, like querying posts, triggered by HTTP requests or database changes. It’s the backbone of our blog’s API.

From one user to millions, Lambda scales instantly and connects to DynamoDB, S3, and more for robust features.

The scaling is almost limitless with respect to the number of potential users of your app. The issue is cost: if you suddenly rack up thousands of users, you may end up with a huge bill! Apart from cost, the other issue is the cold start, where a function that has been idle lags briefly on its next invocation. Also, setting up IAM roles or API Gateway can feel overwhelming at first.

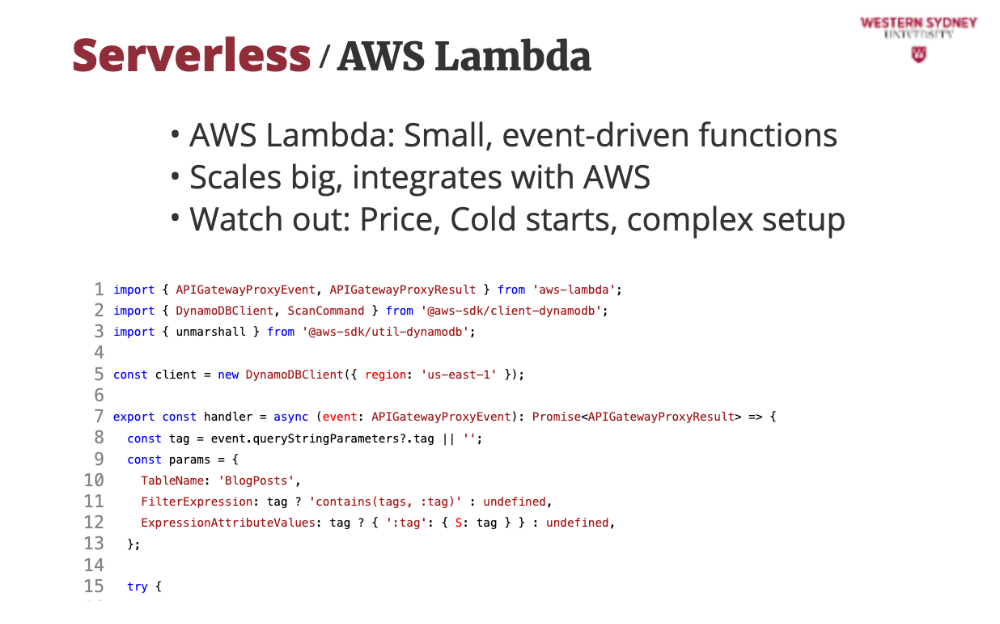

Check out this Lambda function that powers our /api/posts endpoint. It queries DynamoDB, a database for posts, filtering by tag, and scales automatically. Each line builds a robust API, handling user requests with type-safe TypeScript and error handling, perfect for our blog’s backend. And if you are curious what DynamoDB is, it's a database from AWS optimised for serverless access and cross-region replication, so your data is always close to your users.

In this lambda function we import the necessary packages that will allow us to connect to the DynamoDB in a particular region.

The Lambda function ... is just a function that receives parameters containing information about the request coming from a user.

In the body of this function we connect to the database and return the required data ... not much magic there.

Edge functions are a super-fast alternative to serverless Lambda functions, this time supported by Vercel deployments. Edge functions execute close to users (Tokyo, London, anywhere), cutting latency to under 50ms for snappy blog responses.

They are perfect for rewriting URLs or tweaking headers, keeping our blog’s front-end zippy without heavy logic.

In Vercel, all you need to do is to set runtime: 'edge', which deploys functions to the CDN, ensuring global speed for our blog.

This edge function rewrites /tech to /api/posts?tag=tech, adding country-based personalisation. Deployed globally via runtime: 'edge', it ensures users get fast, tailored responses. It’s lightweight, keeping our blog’s front-end agile.

Setting runtime: 'edge' ensures global deployment, making our blog’s URLs fast for all users.

Like Lambda functions, an edge function accepts parameters that contain information about the user’s request.

In this edge function, we extract the tag and country and personalise content, enhancing user experience without delay. We rewrite the short public URL to the longer internal one, which keeps the URLs users see clean.

Edge functions aren’t invincible! We will show you a failed example that times out from heavy logic. Stick to lightweight tasks like rewriting URLs, or users will see errors. Let’s move to deploying our full app next!

Edge functions can’t handle heavy tasks; they time out after 1-5 seconds, unlike the more flexible Lambda.

Trying to process posts or query databases directly can time out or crash, leaving our blog’s users waiting.

Let’s see a mistake that breaks our blog’s edge function, teaching us what to avoid. This edge function tries filtering posts directly, but it’s a disaster! The slow API and heavy loop exceed edge limits, timing out. Instead, it should rewrite to a Lambda function, keeping our blog fast and teaching us to keep edge logic light.

runtime: 'edge' is correct, but the logic below misuses it, harming performance.

Fetching from a slow API delays users, risking timeouts on edge’s tight limits.

Also, heavy computations such as this filtering overload the runtime, crashing the function.

So, how do we deploy our serverless functions?

On Vercel, your Next.js app is automatically deployed as serverless functions, no configuration needed.

On AWS, you will have to configure your resources yourself, which can be daunting at first, but the pricing is more reasonable than Vercel’s. Check out the description of this lecture for an example.

Other providers such as Azure also require configuration; you can find information about it on their respective websites.

Let's wrap up!

Serverless lets your apps handle traffic spikes without server management.

Use Lambda for APIs, but optimize memory to cut delays, ensuring fast post queries.

Use Edge for speed, but avoid heavy logic to prevent timeouts.

Deploying the Next.js app to Vercel is seamless, while deploying to AWS Lambda requires configuration.

Serverless isn’t free: watch usage and test deployments to keep our blog humming. There are too many horror stories out there of people racking up bills of thousands of dollars.